
My current research focuses on geometry processing using deep learning. I am also interested in related research topics in 3D vision and graphics. Previously, I worked on non-rigid 3D reconstruction, 3D scene editing, and autonomous driving perception.

Email / CV / Google Scholar / GitHub / LinkedIn / X / Bluesky


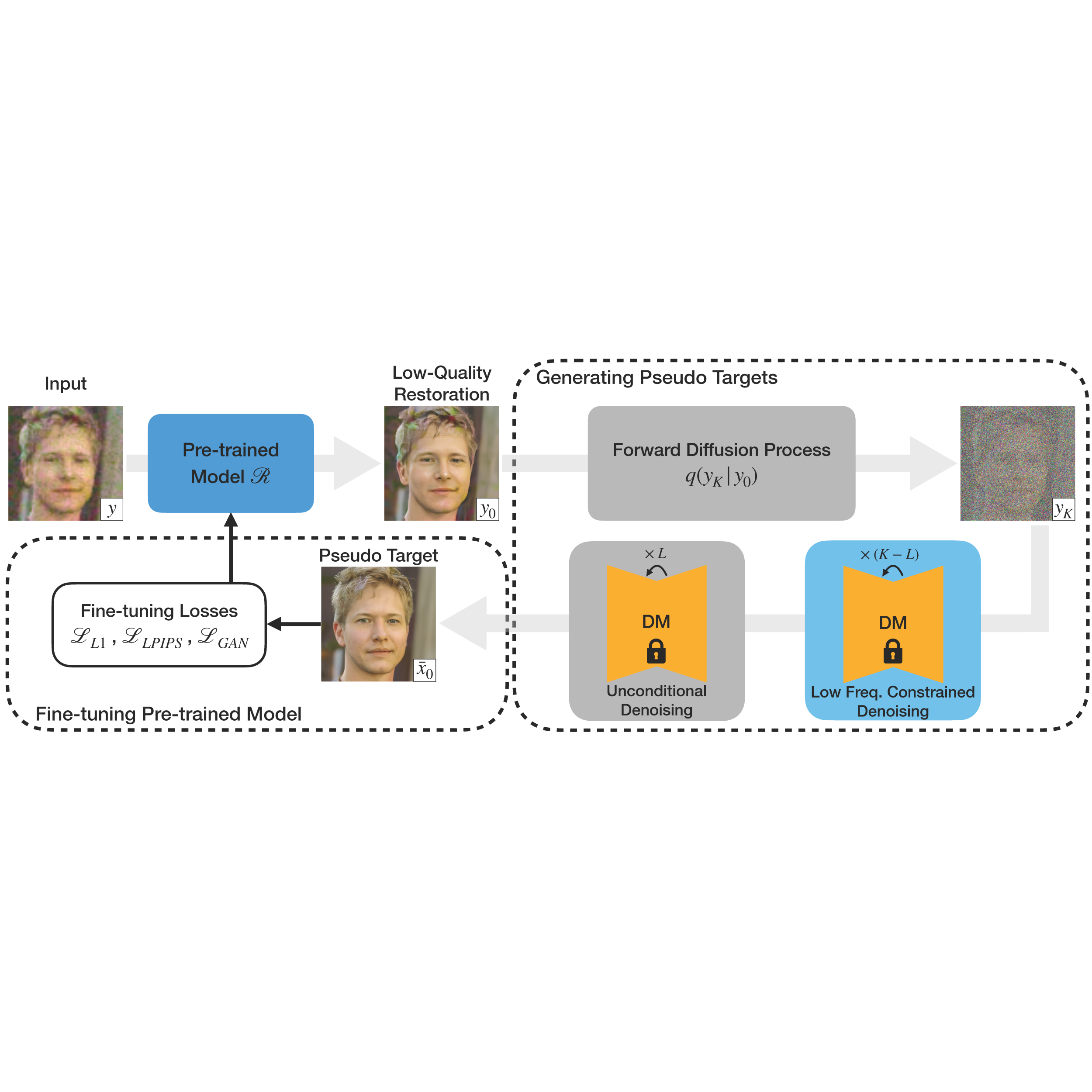

Tianshu Kuai, Sina Honari, Igor Gilitschenski, Alex Levinshtein
WACV 2025 (Oral)
project page / arXiv / code / data

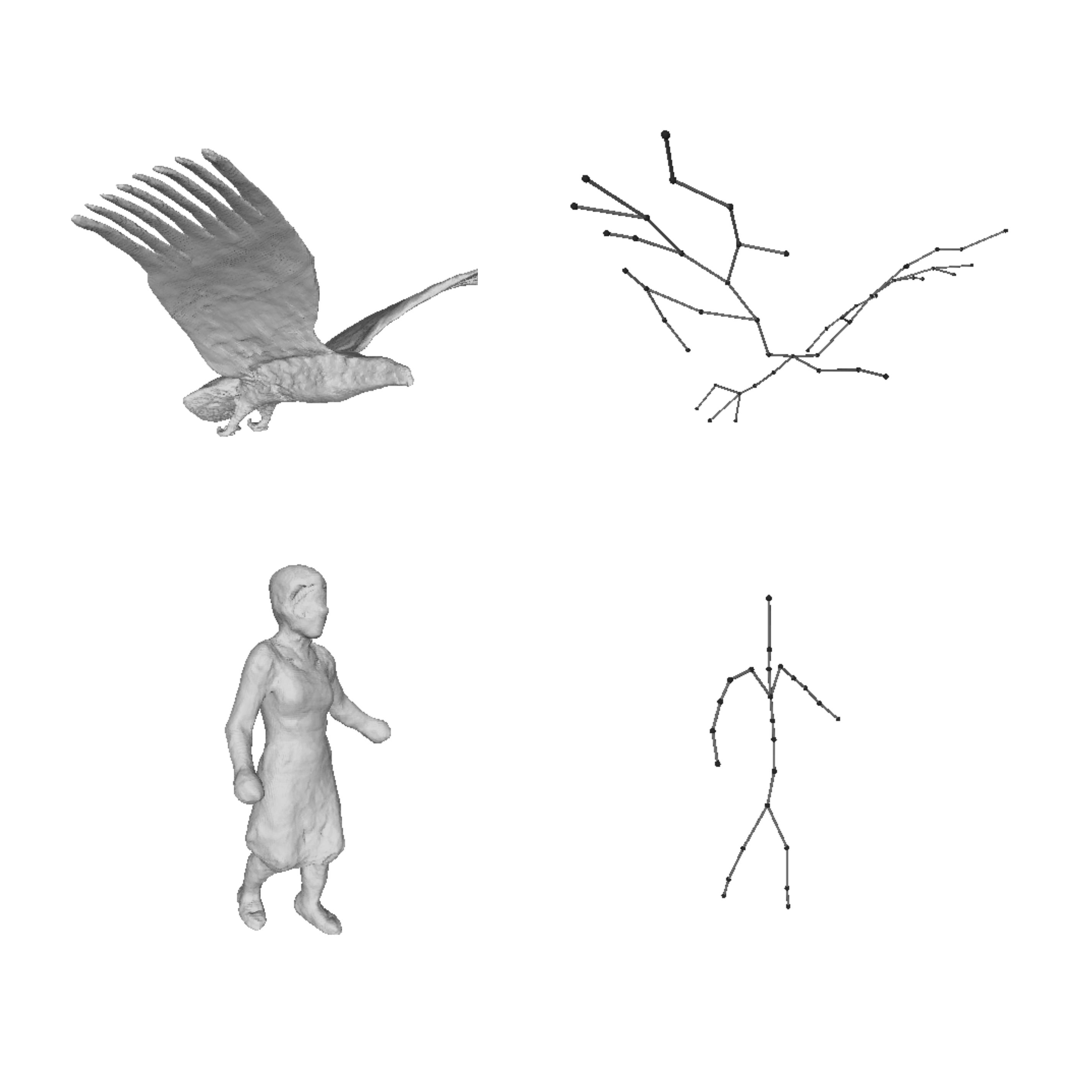

Tianshu Kuai, Akash Karthikeyan, Yash Kant, Ashkan Mirzaei, Igor Gilitschenski
CVPRW 2023
project page / arXiv / code / data

Anas Mahmoud, Jordan S.K. Hu, Tianshu Kuai, Ali Harakeh, Liam Paull, Steven L. Waslander
CVPR 2023
arXiv / code

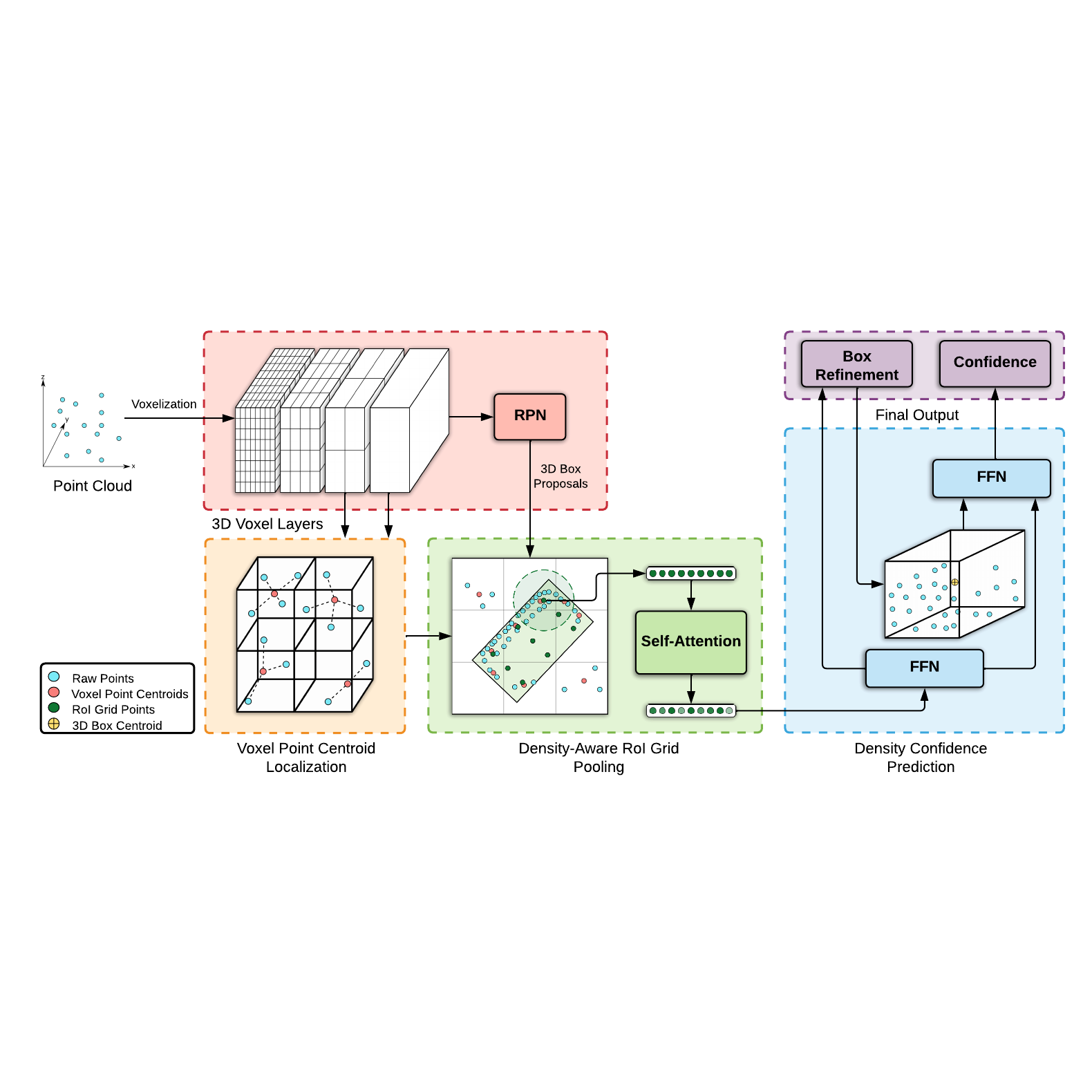

Jordan S.K. Hu, Tianshu Kuai, Steven L. Waslander
CVPR 2022
arXiv / code

Samsung AI Center Toronto
May 2023 - April 2024 | Toronto, ON

Qualcomm
May 2020 - May 2021 | Toronto, ON